- Part One: Deep dive into five Mapper Archetypes. Each showcases how we’ve observed individuals, trios, and entire teams become overwhelmed by their trees.

- Part Two (this post): Interview with Dr. Else van der Berg (product management, discovery & validation enthusiast) on tactics she uses within her trios to keep noise down and signal high.

Real World Tactics (An Interview)

Now that we’ve looked at five archetypes that introduce noise and chaos, let’s look at some real-world tactics you can use today to avoid falling prey to them. Dr. Else van der Berg is a discovery and validation enthusiast working as an interim product lead within startups and scale-ups. We reached out to Else after observing how she integrates data from surveys, product analytics, and the ~30 monthly interviews she conducts with customers. Despite working with a ton of data, Else drives clarity, focus, and progress.

## Step One: Arrive at a Product Outcome

Create Alignment | Business to Product Outcomes

Before identifying the right product outcome, Else starts by looking at the business outcomes. She looks for something she can work with, avoiding anything overly generic or “fluffy”. This is important because if you don’t work from a business outcome (or with outcomes in general), you risk becoming a Wandering Mapper.

Else: “I started by aligning on a product metric – without that, I can’t really start discovery.”

Going from business to product outcomes is a large topic, definitely out of scope for this interview, but if this is something you’re working to improve, Else went on to share a post she wrote on the topic – How to connect business metrics to customer opportunities. You may also find this post on using KPI Trees to break down and identify clear goals helpful.

Co-create ONE Product Outcome

Else: “We got alignment around our product outcome by co-creating it, which is a luxury in a startup/scale-up because the right people are willing to get involved, which really helps.”

We love this for several reasons:

- ✅ Co-created with the right people. This builds trust and incorporates additional business context.

- ✅ Starting with a business outcome and narrowing the scope. Starting an OST with a hard-to-move business outcome will result in way too much noise. Breaking it down into product outcomes narrows the focus and prevents you from turning into an Indecisive Mapper later on.

Else: “As a product lead, I would put in a lot of effort to get every product manager to one outcome, not three, especially in a startup or scale-up. It takes a lot of effort to really move one outcome for one ICP (Ideal Customer Profile). I feel like there should be one ICP and one outcome for each product manager.”

If you can limit the number of outcomes, great! You’ll be taking steps to avoid becoming an Overtaxed Mapper, and you won’t constantly pay the learning tax for each one. However, creating this change can be countercultural.

Don’t include every insight

ICPs help businesses define the segments they want to target. In discovery, an ICP can be used to qualify interviewees and collect the right data. Else goes on to share the importance of collecting all of the ICP definitions floating around the business and narrowing them down to one for the selected outcome to build an OST around. She then shares a quick warning:

Else: “If you ever try to build an opportunity solution tree with three very different ICPs it gets really bloated and really broad. I like to avoid it if we don’t have PMF anywhere.”

Q: What if you pick the wrong ICP?

Else: “If you don’t discover great opportunities in your selected ICP, that’s fine, pivot and start again. What you don’t want to do is try running multiple ICPs through an OST at the same time because it’s an absolute nightmare.”

We didn’t explore ICPs or segmentation in the first post in this series, but we have shared patterns we’ve encountered from other teams dealing with different customer segments. We can second that if you have too many “Ideal Customers” or segments in your tree, things will get messy fast.

Embrace the unknown, set a Learning Goal

Q: What happens when you’re working with a new product outcome? When there are a lot of unknowns?

Else: “I feel like a lot of companies have this knee-jerk response to say, ‘We need to have this cool product outcome. Let’s just pick something.’ Sometimes, they also feel that they need to have a performance goal because everybody also wants to say, ‘We are going to get a 5% increase in XYZ’, and they actually aren’t mature enough to set a performance goal because they don’t have a clue how to move it. They are totally new to this metric, they don’t have a baseline, no experience with it, and they have no idea what strategies might increase it by 5%.”

Q: How do you go about setting a learning goal and when do you move to a performance-based one?

Else: “Learning goals are often a bridge to performance goals. So I’ll usually have a team dedicated to something (let’s say, increasing the user activation rate), but they have no idea how to actually do that or what product metric is associated with it. So the first learning goal can be ‘Identify which customer behavior in the product shows that a user is activated or has reached Aha.’ As an example, we think that when users take an action, say ‘Tracking time’, they start to activate. When we’ve learned that users who have tracked time at least 5 times over the past 2 weeks have reached Aha, we can do either:

1. Set a performance goal: Increase the % of new users who track time at least 5 times in their first 2 weeks to x% (but it might still be too soon for that!), OR
2. Set learning goal 2: Identify 3 strategies that are likely to have a positive impact on the % of new users who track time at least 5 times in their first 2 weeks (evidence backed).”

If you don’t know, that’s okay! Learning goals are great for transitioning from a business outcome to a performance-based product outcome, all while preventing you from becoming an Over-Mapper.
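To make the example metric concrete, here is a minimal Python sketch of "the % of new users who track time at least 5 times in their first 2 weeks". The `TimeEntry` record, the `activation_rate` function, and the sample users are all hypothetical; your analytics tool will have its own event schema, and the 5-event, 14-day thresholds are simply the ones from Else's example.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class TimeEntry:
    """One 'tracked time' event (hypothetical schema)."""
    user_id: str
    tracked_on: date


def activation_rate(
    signups: dict[str, date],
    entries: list[TimeEntry],
    min_events: int = 5,
    window_days: int = 14,
) -> float:
    """Share of new users with at least `min_events` entries
    within `window_days` days of signing up."""
    if not signups:
        return 0.0
    counts = {user_id: 0 for user_id in signups}
    for entry in entries:
        signup = signups.get(entry.user_id)
        if signup is None:
            continue  # event from a user outside this cohort
        if signup <= entry.tracked_on < signup + timedelta(days=window_days):
            counts[entry.user_id] += 1
    activated = sum(1 for n in counts.values() if n >= min_events)
    return activated / len(signups)


# Made-up cohort: "ana" tracks time on 5 days in her first week,
# "ben" tracks twice early on and once after the 14-day window.
signups = {"ana": date(2024, 1, 1), "ben": date(2024, 1, 3)}
entries = (
    [TimeEntry("ana", date(2024, 1, 1) + timedelta(days=d)) for d in range(5)]
    + [TimeEntry("ben", date(2024, 1, 3) + timedelta(days=d)) for d in (0, 1, 20)]
)

rate = activation_rate(signups, entries)
print(f"{rate:.0%}")  # 50%
```

Having a number like this is what gives a team the baseline Else mentions; without it, a "+5%" performance goal has nothing to anchor to.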

Step Two: Mapping Opportunities

Narrow vs Broad Scope

Moving into the opportunity space, Else describes two approaches to uncovering opportunities to drive the product outcome.

- Broad scope discovery, which she conducts in user interviews using story-based interview techniques to uncover macro needs and pain points (opportunities).

- Narrow scope discovery, where she shadows users as they interact with the app to observe where they stumble and experience friction (great for identifying incremental improvements).

Else: “Usually, with startups, the real question is, what is our differentiator? Why is our product any good? Why does anyone really care? So I usually pick broad scope to figure out where the opportunities lie.”

Go broad and embrace discomfort (for now)

When Else starts conducting interviews, she uses story-based interviewing techniques to uncover opportunities. Usually, this involves going broad at first, which means a lot of opportunities.

Else: “It’s quite likely you’ll have 100 potential opportunities, and it gets really messy. I just plug them all in there and let it get really broad and horrible with no structure, and I’m okay with that because I’ll clean it up later. I’d feel uncomfortable not plugging them in because I want to start counting how many times something was brought up.”

Else then showed us how she’s using Vistaly’s insights to capture how many times users brought up an opportunity by attributing quotes and notes from interviews to opportunities. She pointed out how she leverages Vistaly’s insight count to act as a simple proxy for how many times something is brought up.

Else: “Mainly top-level [opportunities], except when it’s really obvious. Then I’ll have a pruning session later where I bring in the structure a lot more. In the beginning, you’ll be wrong anyway. Sometimes, it’s super obvious what the parent should be. Or there is already a structure forming, but I don’t want to artificially bring in structure which isn’t really there when I don’t know what the final picture is going to look like.”
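If you want to see the counting idea outside of any particular tool, it can be sketched in a few lines of Python. This is an illustration only, not how Vistaly computes its insight count: the `insights` list and the `mention_counts` helper are hypothetical, and counting each interview once per opportunity is an assumption made here so that one talkative interviewee doesn't inflate the number.

```python
from collections import defaultdict

# Hypothetical notes: (interview id, opportunity the quote was attributed to).
insights = [
    ("int-01", "Forgets to start the timer"),
    ("int-01", "Forgets to start the timer"),  # second quote, same interview
    ("int-02", "Forgets to start the timer"),
    ("int-02", "Invoices take too long to prepare"),
    ("int-03", "Forgets to start the timer"),
]


def mention_counts(insights):
    """Number of distinct interviews in which each opportunity came up."""
    interviews_by_opportunity = defaultdict(set)
    for interview_id, opportunity in insights:
        interviews_by_opportunity[opportunity].add(interview_id)
    return {opp: len(ids) for opp, ids in interviews_by_opportunity.items()}


counts = mention_counts(insights)
# Most-mentioned opportunities first.
for opportunity, n in sorted(counts.items(), key=lambda item: -item[1]):
    print(n, opportunity)
```

A flat tally like this needs no structure at all, which is why it fits the "broad and horrible" early stage Else describes.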

Don’t reach for opportunities that aren’t there

When it comes to interviewing, Else shared this warning about reaching for opportunities.

Else: “I’ve noticed that people distill things from conversations that were not really said by ‘reaching for opportunities’. So what I do is only plug in an opportunity when it comes from someone who really fits our ICP and only when they brought it up themselves. I don’t ask, ‘Hey, is this a pain point for you?’ and wait for, ‘Yeah, I guess so.’”

This is a great example of using segmentation to filter opportunities and story-based interviewing techniques to avoid becoming an Assumptive Mapper or an Over-Mapper.
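Else's rule above has two conditions, and both must hold before an opportunity goes into the tree. A minimal sketch, assuming you tag each mention in your interview notes with those two facts (the `Mention` record and its field names are hypothetical):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    """One opportunity heard in an interview (hypothetical note format)."""
    opportunity: str
    fits_icp: bool    # the interviewee matches the selected ICP
    unprompted: bool  # they raised it themselves, without a leading question


def qualifies(mention: Mention) -> bool:
    """Apply both conditions: right segment AND raised unprompted."""
    return mention.fits_icp and mention.unprompted


mentions = [
    Mention("Forgets to start the timer", fits_icp=True, unprompted=True),
    Mention("Wants a dark mode", fits_icp=False, unprompted=True),
    Mention("Reporting is confusing", fits_icp=True, unprompted=False),
]

accepted = [m.opportunity for m in mentions if qualifies(m)]
print(accepted)  # ['Forgets to start the timer']
```

The "Yeah, I guess so" answer to a leading question is the third case: right segment, but prompted, so it stays out.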

Step Three: Pruning Opportunities

With a whole mess of opportunities, now it’s time to clean things up. Else walked us through a workshop she runs with her team every time she needs to prune the tree and prioritize opportunities.

Workshop Preparation

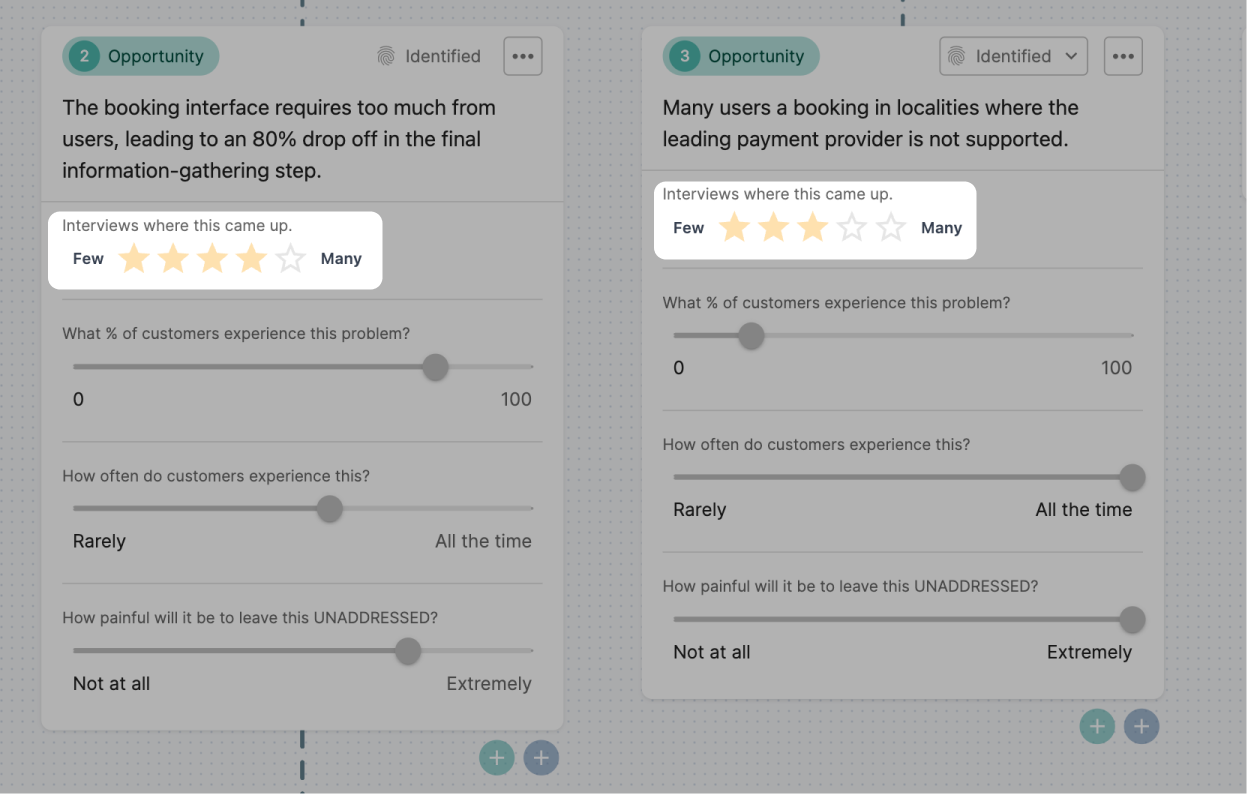

Before Else holds the workshop with additional team members and stakeholders, she prepares the tree by ensuring that the supporting data lives on each opportunity so everyone involved can fully wrap their heads around the opportunity space.

Else: “Before I start pruning, I bring in other data sources. This is why I love Vistaly so much. On an opportunity card, I can connect insights that come from interviews, but I can also put in anything that comes from product analytics data; I can feed in survey results or feedback I hear from my colleagues. It becomes this wealth of information on the opportunity cards rather than having my data from interviews live completely separate from everything else.”

As part of that process, Else sizes opportunities using Vistaly’s custom fields to visualize how big each opportunity is relative to one another.

Else: “Sometimes I use the opportunity sizing techniques that Teresa Torres recommends like customer reach, does this fit our vision and strategy?, etc., but sometimes I don’t, and I just have a star rating scale for ‘Does this appear in a lot of interviews?’, and ‘Is this something I saw in product analytics?’”

Workshop – First 60 minutes (Understand)

Once Else is sure the tree contains the information that the rest of the team needs, she schedules the workshop to clean it up.

Else: “I prefer to work async, but that can be very hard to get people to do. So sometimes I just book a two-hour workshop, and we just sit there in silence for one hour while everyone reads through the OST themselves, and then we continue with the workshop part.”

Workshop – Next ~40 minutes (Align & Prune)

Once the team has had time to take in all of the opportunities, Else moves on to the interactive part of the workshop, which she describes as “80% pruning and 20% restructuring.”

Else: “First, I check with the team to see if they agree with the opportunities based on how it looks today. That’s step one. What I did last time was set up a filtered tree, and I had the original tree. I went through the filtered view and said, ‘If we only look at things that came up in at least five interviews, or that are validated by our historical user analytics data, or that are validated by this survey, then it looks like this. How do we feel about that?’”

After collecting initial feedback, Else moves on to the actual pruning.

Else: “I think that it’s important that you delete because that’s where you cut out the noise, and I think you should cut out things that you haven’t heard at least three, four, or five times. In Vistaly, you can archive them so they’re not gone forever. It really helps to show this filtered version of the tree because then people see it and respond with, ‘Oh, this looks great. We actually want to get here.’ You create this kind of ‘end state’ that everybody wants to get to, making it much easier to get over this hurdle of kicking out opportunities that are actually noise.”

Q: How often do you prune your OST?

Else: “That’s the thing. Pruning is also iterative. We prune it once, and then it becomes unruly again, and you prune it again. I think the first time we pruned was after 15 interviews.”
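The filtered view and the pruning pass Else describes can be sketched as a simple rule over the opportunities. This is a rough illustration, not Vistaly's data model: the `Opportunity` fields, the flat list standing in for the tree, and the function names are all hypothetical, and the default threshold of five interviews is taken from her filtered-view example (she mentions three to five as workable cut-offs).

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    """A card on the tree, flattened to a list for simplicity (hypothetical)."""
    name: str
    interview_count: int
    in_analytics: bool = False  # backed by historical product analytics
    in_survey: bool = False     # backed by survey results
    archived: bool = False


def has_enough_evidence(opp: Opportunity, min_interviews: int = 5) -> bool:
    """Keep an opportunity if ANY one of the three evidence sources supports it."""
    return opp.interview_count >= min_interviews or opp.in_analytics or opp.in_survey


def prune(tree: list[Opportunity], min_interviews: int = 5) -> list[Opportunity]:
    """Return the filtered view; archive the rest instead of deleting it."""
    kept = []
    for opp in tree:
        if has_enough_evidence(opp, min_interviews):
            kept.append(opp)
        else:
            opp.archived = True  # recoverable later, so pruning feels less risky
    return kept


tree = [
    Opportunity("Forgets to start the timer", interview_count=7),
    Opportunity("Reporting is confusing", interview_count=2, in_analytics=True),
    Opportunity("Wants a dark mode", interview_count=1),
]

filtered = prune(tree)
print([opp.name for opp in filtered])
# ['Forgets to start the timer', 'Reporting is confusing']
```

Because pruning is iterative, the same pass can be rerun after each new batch of interviews, with archived items restored if their evidence later crosses the threshold.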

Workshop – Last ~20 minutes (Structure)

After pruning the tree, Else and the team work to restructure the tree and move opportunities around. This is the remaining 20% of the second hour.

Else: “When you delete opportunities, the structure becomes clear, and it’s just shifting things around. When you delete so many of these horizontal branches, it becomes much easier to add the structure and figure out where the parents and children are – the whole thing becomes a lot less unruly.”

Workshop – Opportunity Selection

During the pruning workshop, Else writes down the top two opportunities that surface as the team discusses what to remove and how to restructure the tree. This sets the stage for ideating and evaluating solutions to address the highest-value opportunities.

Else: “I often write down the number one and two opportunities that we really want to focus on. We’re just in that space [after pruning and restructuring], that we’ve already made a decision between one and two.”

This is a great technique to create additional focus, further narrow your scope, and avoid the tendencies of an Indecisive Mapper.

What’s next?

Else: “I then create the space for generating a bunch of solutions to that number one opportunity. This is all Teresa Torres’ framework that I’m trying to follow. There is a lot of research behind why it makes sense to generate multiple solutions to one opportunity and why it’s important to focus on one opportunity and not try to attack multiple opportunities at once. She lays out very eloquently in her book and her course material why this makes sense. So, we ideate a bunch of solutions and quickly dot vote. We’re not voting for the idea we think is coolest, but we’re voting for the idea that is most likely to address the opportunity, and drive the outcome for our ICP.”